tl;dr: my latest project is a customer support chatbot. You can take it for a spin here:

Jo-bot link here

💥 You can ask it questions about Tesco Online Grocery, such as “Do you accept Clubcard online?” or “Can I change my delivery slot?” (see the full question and answer set here).

Below I give some background to the project, the courses that helped me learn the approach, and some notes on what’s happening under the hood. Also, a shout-out to my good friend, Tal Segel, CEO of Helen Doron Connect. It was a coffee chat with him that lit the spark for this project.

Some background

Customer support chatbots … they’ve been around for years, right? In some sense, yes. Take Amazon’s, which I remember using about three years ago and which did a good job of fielding my basic questions. Inevitably, at some point in the conversation, it handed me over to a human to deal with a more complex question. (In case you’re wondering: I received the same item in the mail twice and wanted to know whether I could keep both. After I made my case, they agreed 🎈.)

So what’s new now? There are two main factors at play:

With the release of GPT-3.5 (the model behind ChatGPT) at the end of 2022, blow-your-socks-off language capabilities became available to anyone who can code a few lines of Python. The cost is also invitingly low. I used GPT-3.5 in my chatbot application for about two weeks, processing roughly 500,000 words of text, and was charged just over a dollar. Very reasonable, if you ask me!
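To put that figure in context, here is a back-of-the-envelope estimate. It is a sketch resting on two assumptions that are not from this post: that one token corresponds to about 0.75 English words (a common rule of thumb), and a price of $0.002 per 1,000 tokens, roughly GPT-3.5 Turbo’s list price in 2023. It also ignores any difference in how prompt and answer text are priced.

```python
# Back-of-the-envelope cost estimate for a pay-per-token language model.
# ASSUMPTIONS (not from the post): ~0.75 English words per token, and
# $0.002 per 1,000 tokens (roughly GPT-3.5 Turbo's 2023 list price).
WORDS_PER_TOKEN = 0.75
PRICE_PER_1K_TOKENS = 0.002  # US dollars


def estimate_cost(words: int,
                  words_per_token: float = WORDS_PER_TOKEN,
                  price_per_1k: float = PRICE_PER_1K_TOKENS) -> float:
    """Approximate cost in dollars of sending `words` words through the model."""
    tokens = words / words_per_token
    return tokens / 1000 * price_per_1k


words = 500_000
tokens = words / WORDS_PER_TOKEN
cost = estimate_cost(words)
print(f"{words:,} words is about {tokens:,.0f} tokens, costing about ${cost:.2f}")
# → 500,000 words is about 666,667 tokens, costing about $1.33
```

Under these assumptions the estimate lands at about $1.33, in the same ballpark as the “just over a dollar” above.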

Whilst GPT has a vast amount of general knowledge embedded within its neural network, it often gets facts wrong, and it is unaware of recent information (anything after its training cut-off of September 2021). A modified approach is therefore needed. Instead of asking GPT directly for information, we feed it relevant facts, such as store opening hours or a refund policy, and it uses its language skills to match the customer’s question with the correct answer.

All of this opens up an opportunity for companies to improve their customer service. When done right, customers will be able to get answers to their questions immediately (aka Tier 1 support), with only the more complex ones being passed along to humans (Tier 2 and beyond).

Setting up a chatbot

It is surprisingly simple to set up a chatbot. I’m quite new to programming, but after watching a few hours of training videos, I was more than ready to build a proof-of-concept version. The main framework to learn is LangChain, which lets you use the power of GPT (and other language models) within your applications. It simplifies many basic tasks, such as setting up a database that stores words and sentences as series of numbers (embeddings), which is how language models process them.

Here are three resources to check out, including two short courses taught by Andrew Ng, a wise and seasoned AI educator, and Harrison Chase, founder of LangChain:

Overview of LangChain for app development (here)

Using LangChain to interact with your data and documents (here)

Building a chatbot using the Streamlit front-end (here)

One note of caution: LangChain was released after GPT’s September 2021 cut-off, so asking GPT questions about it is mostly unhelpful. To troubleshoot, I used a combination of developer forums and brute-force trial and error. With most coding problems I’ve hit so far, I’ve found there’s usually a way through, as long as you don’t lose hope and have time on your hands!

Getting under the hood of the application

There are four main stages to what the app is doing:

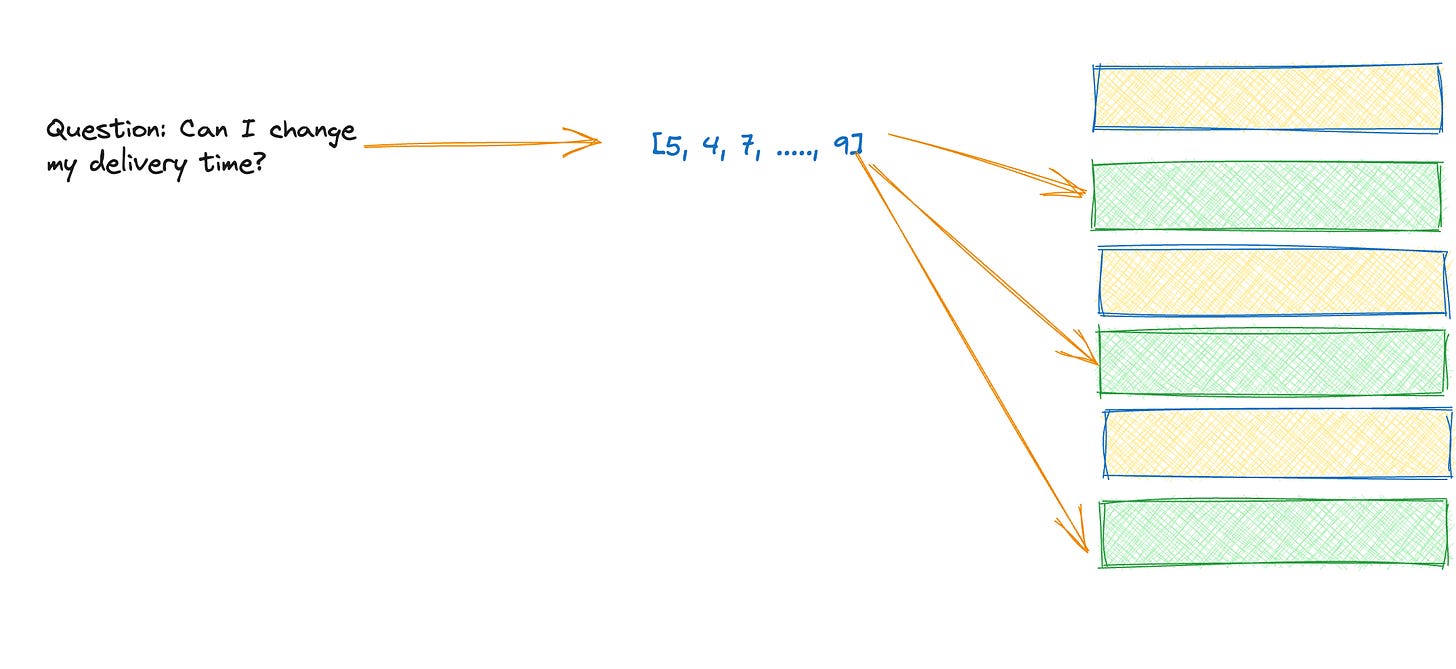

Routing. In response to the customer’s question, GPT uses its language skills to work out the nature of the question. If it’s a question about the estimated delivery date or time, LangChain branches to the relevant routine (which consults a database for the time slot). In our case (see the diagram above), it’s an FAQ question about changing a delivery time, so LangChain routes us to the FAQ routine.
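The routing step can be pictured as a lookup table of handlers. This is a hypothetical illustration, not the app’s actual code: the route names, the handler functions and the keyword-based `classify_question` (a stand-in for the GPT classification described above) are all made up for the example.

```python
from typing import Callable, Dict


# HYPOTHETICAL: in the real app GPT decides what kind of question this is;
# here a simple keyword rule stands in for that classification step.
def classify_question(question: str) -> str:
    q = question.lower()
    if "when will" in q or "arrive" in q:
        return "delivery_eta"
    return "faq"


def answer_delivery_eta(question: str) -> str:
    # Placeholder: a real handler would look up the booked slot in a database.
    return "Checking your booked delivery slot..."


def answer_faq(question: str) -> str:
    # Placeholder: a real handler would run the FAQ similarity search.
    return "Searching the FAQ fact base..."


# Route name -> handler function.
ROUTES: Dict[str, Callable[[str], str]] = {
    "delivery_eta": answer_delivery_eta,
    "faq": answer_faq,
}


def route(question: str) -> str:
    """Classify the question, then dispatch it to the matching handler."""
    return ROUTES[classify_question(question)](question)


print(route("Can I change my delivery time?"))  # → Searching the FAQ fact base...
print(route("When will my order arrive?"))      # → Checking your booked delivery slot...
```

Keeping the handlers in a dictionary means adding a new kind of question is just one new entry, while the classifier (GPT, in the real app) stays the single decision point.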

Similarity search. An embedding model converts the customer’s question into a series of numbers (a “vector”), and the app then searches the fact base of FAQs for similar vectors. The FAQs are stored in a vector database; Pinecone is an example you may have heard of. The closest matches are the facts with the most similar “semantic” meaning to the question. By semantic, I mean they won’t necessarily contain the exact words of the question, but they should have the same meaning. For example, the most similar fact might talk about altering your booked slot: different words from the question, but a similar meaning.
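A minimal sketch of the similarity search, using cosine similarity, a common way to compare embedding vectors. The three-dimensional vectors and FAQ titles are made-up stand-ins; real embedding models produce vectors with hundreds or thousands of dimensions, and a vector database such as Pinecone performs the search at scale. The `min_score` cut-off is an illustrative assumption, one possible way to decide that a question isn’t covered by the fact base and should go to a human (Tier 2).

```python
import math
from typing import Dict, List, Optional, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy fact base: placeholder FAQ titles with made-up 3-D "embeddings".
FACTS: Dict[str, List[float]] = {
    "FAQ: altering your booked delivery slot": [0.9, 0.1, 0.0],
    "FAQ: refunds for missing items": [0.1, 0.9, 0.2],
    "FAQ: using Clubcard online": [0.0, 0.2, 0.9],
}


def most_similar(query: List[float],
                 facts: Dict[str, List[float]],
                 min_score: float = 0.8) -> Optional[Tuple[str, float]]:
    """Return (fact, score) for the closest fact, or None if nothing is close enough."""
    scored = [(text, cosine_similarity(query, vec)) for text, vec in facts.items()]
    best = max(scored, key=lambda pair: pair[1])
    # Below the (assumed) threshold, treat the question as not covered: hand over to a human.
    return best if best[1] >= min_score else None


# Pretend embedding of "Can I change my delivery time?"
print(most_similar([0.85, 0.15, 0.05], FACTS))
```

The question and the “altering your booked slot” fact share no wording here, yet their vectors point in nearly the same direction, which is exactly the “semantic” match described above.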

Synthesizing an answer. Armed with the customer question and the relevant set of facts, GPT can now use its knowledge of language to provide a synthesised answer.
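Synthesis mostly comes down to building a prompt that contains both the question and the retrieved facts, then sending it to GPT. The template wording below is hypothetical (the app’s actual prompt isn’t shown in this post), and the call to the model itself is left out. The idea is similar in spirit to LangChain’s “stuff” chain type, which places the retrieved documents directly into the prompt.

```python
from typing import List

# HYPOTHETICAL prompt template: tells the model to answer only from the supplied facts.
PROMPT_TEMPLATE = """You are a customer support assistant for an online grocery store.
Answer the customer's question using ONLY the facts below.
If the facts do not contain the answer, say you will pass the question to a colleague.

Facts:
{facts}

Question: {question}
Answer:"""


def build_prompt(question: str, facts: List[str]) -> str:
    """Combine the retrieved facts and the customer's question into one prompt."""
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return PROMPT_TEMPLATE.format(facts=fact_lines, question=question)


prompt = build_prompt(
    "Can I change my delivery time?",
    ["FAQ: altering your booked delivery slot (placeholder text)"],
)
print(prompt)  # this string is what would then be sent to GPT
```

Telling the model to stick to the supplied facts is what keeps its answers grounded in the fact base rather than in whatever it half-remembers from training.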

Keeping memory. Another nice feature of LangChain is that you can set it to keep the memory of the conversation in play. So perhaps in your first question you ask about deliveries, and in the follow-up question you ask “can I change it?”. Without memory, the language model would not know what “it” refers to. By turning memory on, we can make the chatbot-customer interaction more fluid and human-like.
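LangChain provides memory classes for this (for example `ConversationBufferMemory`). The sketch below is not LangChain’s implementation, just a plain-Python illustration of the idea: store recent turns and prepend them to the next prompt so the model can work out what “it” means. The `ConversationMemory` class and its `max_turns` limit are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ConversationMemory:
    """HYPOTHETICAL buffer of recent (question, answer) turns."""
    max_turns: int = 5  # only remember the most recent turns, to keep prompts short
    turns: List[Tuple[str, str]] = field(default_factory=list)

    def add(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))
        while len(self.turns) > self.max_turns:
            self.turns.pop(0)  # drop the oldest turn

    def as_context(self) -> str:
        return "\n".join(f"Customer: {q}\nBot: {a}" for q, a in self.turns)


memory = ConversationMemory()
memory.add("When is my delivery slot?", "(bot's answer giving the booked slot)")

follow_up = "Can I change it?"
# The earlier turn gives the model what it needs to resolve "it" (the delivery slot).
prompt = f"Conversation so far:\n{memory.as_context()}\n\nNew question: {follow_up}"
print(prompt)
```

Capping the number of remembered turns is a trade-off: more history gives the model more context, but every remembered word is re-sent (and paid for) with each new question.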

Next steps

Within about two weeks, I was able to develop a proof of concept, shown below. This is just the start; to get it to production level, we will need to do three main things:

Build out the fact base to cover more and more customer questions. Being able to answer roughly 80% of them might be a good target.

Thoroughly test the app.

Tweak and develop based on the test results, and keep iterating until we reach an acceptable level of quality.
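Testing against an 80% target could start with a simple harness like the one below. Everything in it is hypothetical: the test questions, the FAQ ids, and `toy_bot`, a keyword-matching stand-in for the real chatbot that returns the id of the FAQ it would answer from (or `None` when it would hand over to a human).

```python
from typing import Callable, Dict, Optional


def evaluate(bot: Callable[[str], Optional[str]], test_set: Dict[str, str]) -> float:
    """Fraction of test questions for which the bot picks the expected FAQ."""
    correct = sum(1 for question, expected in test_set.items() if bot(question) == expected)
    return correct / len(test_set)


# HYPOTHETICAL test set: question -> id of the FAQ entry that should answer it.
TEST_SET = {
    "Can I change my delivery slot?": "faq_change_slot",
    "Do you accept Clubcard online?": "faq_clubcard",
    "Can I keep an item that was sent twice?": "faq_duplicate_item",
}


def toy_bot(question: str) -> Optional[str]:
    # Stand-in for the real chatbot: returns the FAQ id it would answer from,
    # or None when it would hand the question over to a human.
    q = question.lower()
    if "slot" in q:
        return "faq_change_slot"
    if "clubcard" in q:
        return "faq_clubcard"
    return None


score = evaluate(toy_bot, TEST_SET)
print(f"Answered {score:.0%} correctly (target: 80%)")
# → Answered 67% correctly (target: 80%)
```

Here the toy bot handles two of the three questions, falling short of the target, which is the kind of gap the build-out and tweak-and-iterate steps would then address.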

Takeaways

It’s really easy to set up a chatbot to answer questions about your data and documents.

LangChain is a powerful framework for building language-model-powered apps, but it could be improved with better, more user-friendly documentation. I went down more than one rabbit hole to solve problems that I think could have been covered in the LangChain docs and tutorials.

Over time, I believe we will see companies shift to this GPT-based approach, giving customers a better experience and moving human customer support staff from answering simple questions to answering more complex, nuanced ones (those not covered by the chatbot’s fact base).

Larger companies may be more hesitant to make the transition and trust the algorithm. This could be an opportunity for small and medium-sized companies to take the initiative and adopt a slightly more risk-taking approach: a good-but-not-perfect response from a chatbot may be good enough.